The Guide To Analytic Hierarchy Process

- What is Analytic Hierarchy Process

- AHP Applications

- How AHP works

- When (Not) to Use Pairwise Comparisons

- 3 Steps to Reduce the Number of Comparisons

- How to Deal with Inconsistent Comparisons

- Key features of AHP software

- Video Guide: Case Study on Selecting a Data Warehouse Solution

- Summary

What is Analytic Hierarchy Process?

The Analytic Hierarchy Process (AHP) is a structured and transparent way of making decisions. With AHP your decision becomes a step-by-step process, which simplifies decision-making, enables collaboration and improves the quality of decisions.

AHP was developed in the 1970s. Since then, our knowledge of good decision-making has increased significantly, and scientists have developed many new decision-making methodologies. This section gives a few reasons why AHP is still a good choice for making collaborative decisions.

AHP is Well-proven

For the last 30-35 years AHP has been thoroughly tested by thousands of organizations from around the globe… and it seems to work!

There are lots of case studies that describe how large organizations used AHP for their strategic decisions to achieve better outcomes.

AHP Helps reduce decision bias

When you make collaborative decisions, your stakeholders don’t all agree on which goals are most important. They may not even agree on what the goals really are!

To make matters worse, each individual is, let’s be frank, human. Humans are poor at making rational decisions that involve multiple “dimensions.” As soon as you come up against a decision with more than 2 or 3 “dimensions”, the subconscious kicks in and that’s when the problems really start. Biases will influence the decision without the decision-maker even being aware of it.

AHP helps address this. It structures the decision in a way that helps reduce decision bias, that ensures every voice is heard and that actively builds consensus between your stakeholders. The result, which has been validated by researchers around the world, is better decisions with a strong commitment to action from your stakeholders.

AHP is Intuitive and easy to use

People don’t feel comfortable taking recommendations from a method if they don’t understand how it works. They don’t need to know the details, but they want to understand the idea behind it.

With AHP, you break a complex decision into explicit goals, alternatives and criteria. You prioritize the criteria and evaluate the alternatives in light of those criteria. People get it.

In contrast, some more recently developed methodologies have a process and underlying math that is so complex that the software becomes a “mathematical black box”. It takes your input and returns recommendations. What happens in between is a mystery (at least for a regular human being) and that undermines trust. And trust is really important when it comes to the final outcome.

Using Analytic Hierarchy Process for your collaborative decision making works because, with AHP, you can explain how it works.

AHP is Designed for multi-criteria decisions

When you make important decisions, there are always conflicts between criteria. For example, “minimizing price” and “maximizing quality” are often contradictory goals. This is made worse when you're working in a team. Collaborative decision making, by definition, means people have different views and priorities.

Decision making best practice involves taking into account all important criteria. However, this "best practice" is often ignored as multi-criteria analysis is much more challenging than, say, making a decision based on just the price.

Analytic Hierarchy Process allows you to take into account all important criteria and to organize them into a hierarchy.

AHP builds alignment around criteria priorities

Some decision-making methodologies ask participants to assign weights to criteria. The question is, "How should I set those weights?" Well, however Fred in Finance does it, Sally in Sales will disagree.

Participants always have different priorities, and without a supporting process, it is hard to reach consensus. Some methodologies lack this key mechanism, which leaves your group with an unresolved problem.

And then the very smart quants dive in. They've developed another group of methods where the weight of a criterion is not a value but a function. From a theoretical point of view this might be a good concept, but it is usually very hard to use for real collaboration.

If it is hard to agree on a value, and people need sensitivity analysis to check different scenarios, it will be much harder to agree on a function with a weird shape. How was the function developed? Shouldn't it be a bit different? Remember what I wrote about “mathematical black boxes”? This is another. And it's a Pandora's box. I wouldn't recommend you open it unless you want to spend a lot of time with equations and whiteboards.

In AHP, setting priorities is resolved with pairwise comparisons.

- Each participant is individually asked, "Is A or B more important, and by how much?"

- After that, you review comparisons together, discuss and try to reach consensus on their values.

- If consensus is not possible, you can use the average value and move forward.

- Then, your collaboratively-agreed comparisons are translated into weights by an algorithm.

This is a process that is centered on collaboration and which has a mechanism that helps to eliminate deadlocks.
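
The process above says you can fall back on an average when consensus is not possible, but not which average. A common choice in the AHP literature is the geometric mean, because it keeps judgments reciprocal: if averaging the A-vs-B judgments gives r, averaging the same judgments phrased as B-vs-A gives 1/r. The Python sketch below assumes each judgment is recorded as a single number r meaning "A is r times as important as B" (r < 1 favors B); the function name is hypothetical and not taken from any particular AHP tool.

```python
import math


def combine_judgments(judgments):
    """Combine several participants' judgments of the same pair (A vs B).

    Each value r means "A is r times as important as B"; r < 1 favors B.
    Uses the geometric mean so that the combined result stays reciprocal.
    """
    if not judgments:
        raise ValueError("need at least one judgment")
    return math.prod(judgments) ** (1 / len(judgments))


# Fred thinks A is 3x as important as B; Sally thinks B is 3x as important as A.
fred, sally = 3, 1 / 3
print(combine_judgments([fred, sally]))  # geometric mean: about 1.0, a tie
print((fred + sally) / 2)                # arithmetic mean: about 1.67, favors A
```

If you asked the question the other way round (B vs A), the arithmetic mean of 1/3 and 3 would again be about 1.67, this time favoring B, which is why a plain arithmetic mean of ratio judgments can contradict itself.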

AHP Validates consistency

We are all human, and it's our prerogative to be inconsistent sometimes. We make mistakes. When we make collaborative decisions, there are multiple people who can make mistakes and be inconsistent. Is it any wonder so many group decisions end in deadlock and confusion?

Fortunately, AHP can eliminate some of these problems. You provide redundant data (more comparisons than strictly needed), and an algorithm checks whether your input is consistent.

Good supporting software can then identify these inconsistencies and help you address the ones that need to be addressed.

AHP Applications

When you look into the case studies, you will notice that AHP has been used for a wide variety of decision-making problems. There are AHP applications in project prioritization, vendor selection, technology selection, site selection, hiring decisions and more.

Having one good approach lets good decision making become part of your everyday processes; part of your culture.

Strategic alignment

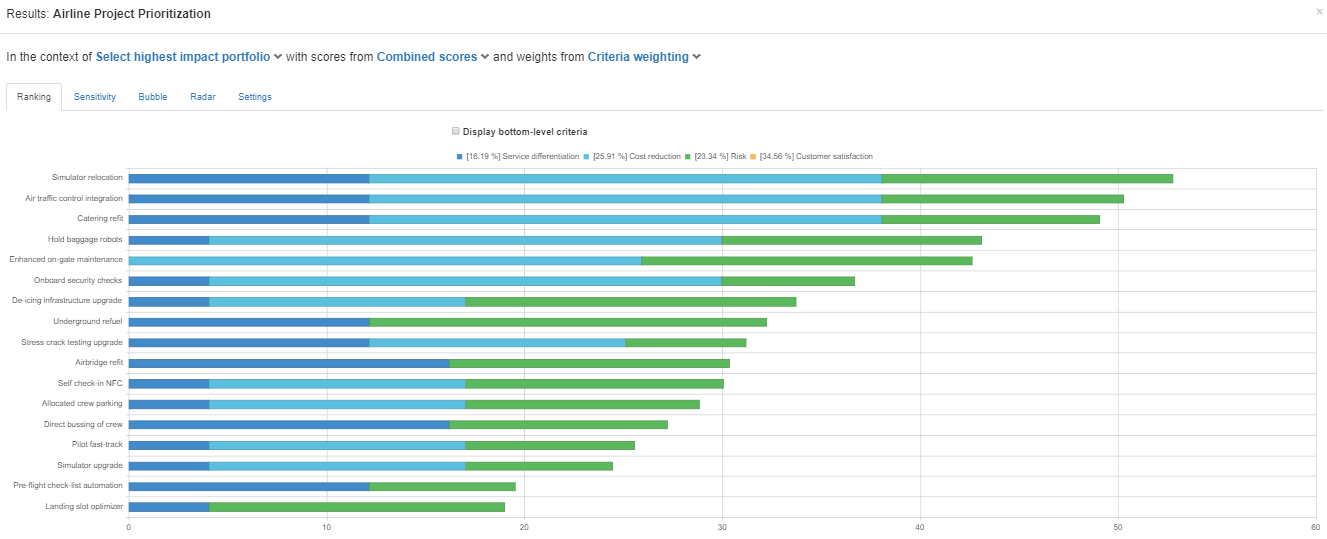

AHP lets you capture your strategic goals as a set of weighted criteria that you then use to score projects. It’s the “how” that matters: AHP structures the collaboration between your different stakeholders (executives, business unit heads, subject matter experts, etc.) and uses decision science to improve both the quality of and the buy-in to your decision.

The result is a ranked list of projects - each project having a score between 0 and 100 - that you can use to drive your project selection and resource allocation decisions.

This score represents how well your projects are aligned to your strategic goals, and projects that are aligned with strategic goals are 57% more likely to achieve their business goals.

Project prioritization

For decades, researchers have been inventing and evaluating different methods of prioritizing projects and, so far, the Analytic Hierarchy Process is the winner.

Project prioritization is a difficult problem. You have a diverse set of stakeholders who want to “get projects done”. You also have a finite set of resources to deliver those projects. There are never enough resources to satisfy everyone’s needs.

Your projects are the mechanism for achieving your strategic goals, so picking the wrong projects means your organizational performance suffers.

Research carried out in business schools and departments of engineering, in psychology labs and in operations research groups right around the world has invented, refined and evaluated over 100 methods of prioritizing and selecting projects. In 2017, the University of New South Wales published a review of all of this work.

The result is that only two methods of prioritizing projects were found to be suitable, and the one that was deemed to be most usable is the Analytic Hierarchy Process.

Making other decisions

Of course, AHP is not only used in project prioritization. Some of the most common decisions made using AHP include:

- Procurement

- Site selection (e.g. “Where should we build our new warehouse / road / airport?”)

- Technology selection

- Strategy development

- Evaluating different design options

- Stakeholder engagement (e.g. to determine product roadmap, to select the location of an airport or to help develop government policy)

- Trade studies

- Hiring

In fact, the analytic hierarchy process is a great method to use any time one or more of these conditions is met:

- Your decision has multiple stakeholders

- Your decision is long-lasting

- Your decision has a significant impact on your organization

- Your decision involves a large expenditure of money or resources

- You need to “prove” you made a good decision

How AHP works

In concept, AHP is very simple. You define and evaluate a set of business goals which translate into weighted criteria. You score each project against those criteria and calculate a weighted score for each project. The process is similar to a weighted score from a spreadsheet or a prioritization matrix, but with a couple of important differences.

It’s HOW you do the weighting and scoring that differentiates AHP-based project prioritization.

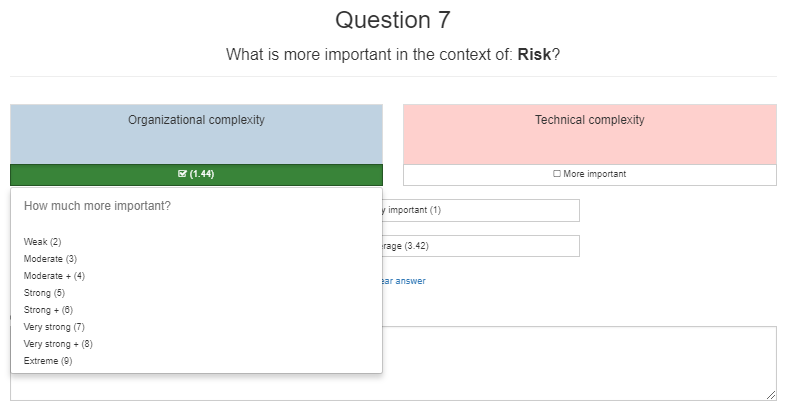

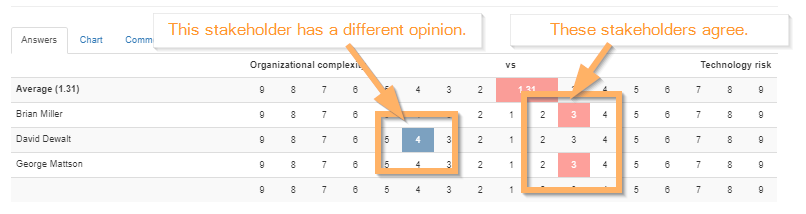

The most important difference between AHP and other methods is the way in which you weight your criteria. AHP breaks this question up into a number of small judgments. You are shown pairs of criteria and then tell the software which criterion is more important (and by how much).

This simple process helps reduce bias because each judgment is “small enough” for you to make it rationally, without relying on the (biased) subconscious.

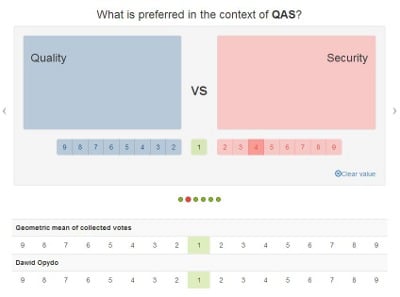

Crucially, AHP is also a team sport. Your various stakeholders get to vote on which criterion is more important. Sometimes there will be good alignment, but other times, such as in the following image, the stakeholders disagree.

Identifying the differences of opinion allows you to have a quick discussion about why your stakeholders disagree. This process encourages your stakeholders to listen to each other and to learn about other teams’ needs. It means the issue is viewed from a number of different directions, reducing any individual’s bias.

The “loudest voice” no longer wins just because it is the loudest voice.

When the group comes to agreement on what the overall vote should be, everyone understands where this vote came from and, typically, the stakeholders walk away from this discussion with a strong sense of ownership.

When you’ve gone through all the pairs of criteria, AHP software like TransparentChoice will find the best fit to your data and calculate the weighting for your criteria.
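
TransparentChoice's exact fitting algorithm isn't described here. As an illustration only, the sketch below uses the row geometric mean method, one standard AHP technique that fits weights to a full set of pairwise judgments by minimizing squared errors on a log scale (Saaty's original method uses the principal eigenvector instead; for perfectly consistent judgments the two agree). The function name and the input format, a dict mapping (a, b) to "a is r times as important as b", are assumptions.

```python
import math


def criteria_weights(criteria, judgments):
    """Derive criterion weights from pairwise judgments.

    judgments[(a, b)] = r means "a is r times as important as b".
    Assumes every pair has been judged exactly once.
    """
    n = len(criteria)
    if len(judgments) != n * (n - 1) // 2:
        raise ValueError("expected one judgment per pair of criteria")
    pos = {name: k for k, name in enumerate(criteria)}
    # Build the reciprocal comparison matrix: M[i][j] * M[j][i] == 1.
    matrix = [[1.0] * n for _ in range(n)]
    for (a, b), r in judgments.items():
        i, j = pos[a], pos[b]
        matrix[i][j] = r
        matrix[j][i] = 1.0 / r
    # Row geometric means are the log-least-squares "best fit" weights.
    gm = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(gm)
    return {name: g / total for name, g in zip(criteria, gm)}


weights = criteria_weights(
    ["Cost", "Quality", "Risk"],
    {("Cost", "Quality"): 2, ("Cost", "Risk"): 4, ("Quality", "Risk"): 2},
)
print(weights)  # roughly Cost 0.571, Quality 0.286, Risk 0.143
```

These example judgments are perfectly consistent (2 x 2 = 4), so the weights come out in the exact ratio 4 : 2 : 1.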

Now you’re ready to start scoring your projects against the criteria. This should also be a team sport.

Good AHP software lets you bring together different opinions on how a project might, for example, improve customer retention. Different perspectives not only improve the quality of the decision, they also help build buy-in and trust in the scores you finally calculate.

Which, of course, is the next step. Combining the weighted criteria with the scores for each project gives you a weighted score for each project. You should end up with something like this: a ranked list of projects that you can use to make project selection and resource allocation decisions.
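
The combination step is a weighted sum. Here is a minimal Python sketch, assuming criterion weights that sum to 1 and project scores already normalized to the range 0 to 1, so each total lands between 0 and 100. The projects, criteria and numbers are made up for illustration.

```python
def rank_projects(weights, scores):
    """Return (project, score out of 100) pairs, best first.

    weights: {criterion: weight}, weights summing to 1.
    scores:  {project: {criterion: score between 0 and 1}}, higher is better.
    """
    ranked = []
    for project, s in scores.items():
        total = sum(w * s[criterion] for criterion, w in weights.items())
        ranked.append((project, round(100 * total, 1)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


weights = {"Revenue": 0.5, "Retention": 0.3, "Risk": 0.2}  # hypothetical
scores = {  # for "Risk", a higher score means lower risk
    "CRM upgrade":    {"Revenue": 0.8, "Retention": 0.9, "Risk": 0.5},
    "New website":    {"Revenue": 0.6, "Retention": 0.4, "Risk": 1.0},
    "Data warehouse": {"Revenue": 1.0, "Retention": 0.2, "Risk": 0.25},
}
for project, score in rank_projects(weights, scores):
    print(f"{project}: {score}")
```

This prints CRM upgrade (77.0), New website (62.0) and Data warehouse (61.0), in that order.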

When (Not) to Use Pairwise Comparisons

Analytic Hierarchy Process without pairwise comparisons? Are you crazy? Is this even possible?

Well, pairwise comparisons are the core mechanism of AHP, the factor that makes it easy to use, and the main reason why people love AHP. Yet people sometimes get so wrapped up in pairwise comparison and its elegance that they don’t realize it sometimes gets in the way. Sometimes, scales are better. So we wrote this section to help you understand when to use each.

So, let’s get it out of the way. Here are the general rules for using pairwise comparisons; if you follow them, you will get it right 95% of the time.

Rule 1: Use pairwise comparisons to prioritize criteria

Criteria are things that, by definition, can’t be measured on the same scale. You can’t come up with a scale that measures risk and cost – they are just different.

But you still have to prioritize your criteria, and this is where pairwise comparison comes in. Simple questions like, "Is risk or cost more important?" are a great way to unpick the complexity of a multi-criteria decision, and AHP gives us the tools to put the whole picture back together again.

Rule 2: Use scales to score alternatives

Most people are usually fine with Rule 1. It makes sense, but they often get confused when looking at alternatives. They want to use pairwise comparisons to compare, say, different projects. This seems to make sense because the projects can be so different in nature as to be incomparable.

But here’s the thing. You are using criteria to assess the projects, and each criterion implies something you can measure, something you can directly compare between alternatives. For example, you might be trying to choose between a new car and a new pool, things that are totally different. Even so, you can still have measurable criteria like, "Cost" and "How frequently will I use it?" and "Will it impress my neighbors?"

Even though a car and a pool are totally different things, you can measure them on a specific scale for each criterion. You will use a car every day, all year round, whereas you might only use a pool twice a year. So even though your alternatives are very different, they are easy to compare "like for like" on one criterion if you have a good scale. This helps you be objective and precise.

Oh, and if you can’t think of a good scale for your criterion, ask yourself whether you’re really at the bottom of the criterion tree, or whether your criterion is actually made up of sub-criteria.

Rule 3: Breaking the rules

Like all good rules, of course, these rules should, sometimes, be broken. If you’re tempted to use pairwise comparisons to score alternatives, which is a very common urge, go through this checklist of questions – they will help you decide whether or not you can break the rules. In most cases, you’ll realize that scales will work better.

Q1. Are there fewer than 9 alternatives?

Don’t use pairwise comparison to score alternatives if there are more than about 9 alternatives. There are 3 main problems you will hit when using pairwise comparisons to evaluate a large number of alternatives:

It is time consuming. The more alternatives, the more comparisons. When you have multiple evaluators, all of them need to provide judgments. Also, in group decisions, it will take much more time to build consensus. Pairwise comparisons for a large set of alternatives might simply be too time consuming. But even if you did spend the time, there are still other problems…

Problems with consistency. With a large set of comparisons, it can be difficult to be consistent. With fewer alternatives, you are likely to be more consistent and, frankly, it can take a long time to identify and fix all the inconsistencies.

If you are inconsistent, TransparentChoice will identify that for you… but then what? Are you really going to spend all that time trying to tweak your answers to be consistent? Probably not. But even if you do complete comparisons with sufficient consistency, there is another problem…

Small differences in final scores. Pairwise comparison is a way of working out how to "share out the pie". If A is more important than B, A will score higher than B. But if you have all the options, A through Z, then the pie has to be shared among 26 options. Those are small slices of pie, and small changes in the pairwise comparisons can lead to changes in ranking.

There are a couple of ways, in AHP theory land, to improve matters, but they do not fundamentally get around the pie problem. But scales do. Each alternative gets the score it deserves regardless of how many other alternatives there are.

NOTE: You will not have these problems with criteria. If you have more than 9 criteria, they will probably be organized as a hierarchy with some criteria sitting “under” others. Since you only compare criteria at the same level of the same branch, you rarely get a situation where you are trying to compare more than 9 criteria with each other.

Q2. Do you want to be able to score “0”?

If you want to be able to give something a score of zero, don’t use pairwise comparison. For example, if you have a scale for your Customer Support criterion, you might have 2=great, 1=limited support, 0=no support. There is no way, using pairwise comparison, to get that zero, so if you want to be able to use zero, use a scale.

The reason you can’t have zero is simple. Pairwise comparison asks, “Do you prefer option A or option B” and if you score a 3 in favor of option B, that means you like B thrice as much as A. This means that option B would carry 75% of the weight and A would carry 25%. If you score a 9 in favor of B (the strongest preference available) then B would carry 90% of the weight and A would carry just 10%... but 10% is not zero.
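
The arithmetic in this paragraph is easy to check. With only two options, a judgment of r in favor of B gives B a priority of r/(r + 1) and A a priority of 1/(r + 1), so A can never reach zero, whereas a scale can simply include a zero level. The Python sketch below assumes the 2/1/0 Customer Support scale from the previous paragraph, normalized by its top level; the function names are hypothetical.

```python
def pair_priorities(r):
    """Priorities (A, B) when B is judged r times as preferable as A."""
    return 1 / (r + 1), r / (r + 1)


# Customer Support scale: 2 = great, 1 = limited support, 0 = no support.
SUPPORT_SCALE = {"great": 2, "limited support": 1, "no support": 0}


def scale_score(level):
    """Normalize a scale level to 0..1 by dividing by the top level."""
    return SUPPORT_SCALE[level] / max(SUPPORT_SCALE.values())


print(pair_priorities(3))         # (0.25, 0.75)
print(pair_priorities(9))         # A still keeps about 10%
print(scale_score("no support"))  # 0.0
```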

NOTE: You won’t have this problem with criteria. If a criterion would have zero weight, well, you just delete it.

Q3. Will you want to add more alternatives later?

In some decisions, you might gradually add alternatives, or make a preliminary decision and then add other alternatives later. Project prioritization often works like that. If this is the case for your decision, use scales, not pairwise comparisons, to score your alternatives. Here’s why.

You need to compare a new alternative against all the old alternatives. If you use pairwise comparisons, as you add new alternatives, you need to “test” that new alternative against all the others. This isn’t just a logistical pain, it also means that your new judgments are being made at a different time and with different knowledge than the first set of judgments. This, in turn, means you’re looking through a different set of filters and will make different tradeoffs leading to less coherent conclusions.

You will affect ranking. Pairwise comparisons are like football leagues – if you add new teams, or remove existing teams – the whole situation in the league might change. The new team will influence the points collected by all the other teams in matches and… well, the table is likely to change.

Using scales eliminates both of these problems. Scales are “unambiguous” and don’t change over time. Similarly, if you use scales, the score of one alternative is not affected by other alternatives; you can add as many as you need.
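
The league-table effect can be reproduced in a few lines of Python. The sketch assumes perfectly consistent pairwise judgments, in which case AHP's priorities on each criterion are each alternative's underlying value divided by the total across all alternatives (the classic "distributive" normalization). The values, the two equally weighted criteria and the scale maximum of 10 are made up for illustration. Adding option D flips A and B under pairwise priorities, while the scale-based scores for A and B stay exactly the same.

```python
WEIGHTS = [0.5, 0.5]  # two equally weighted criteria (illustrative)


def pairwise_totals(values):
    """Overall scores when each criterion's priorities come from consistent
    pairwise judgments: value / column total (distributive normalization)."""
    totals = [sum(v[c] for v in values.values()) for c in range(len(WEIGHTS))]
    return {
        name: sum(w * v[c] / totals[c] for c, w in enumerate(WEIGHTS))
        for name, v in values.items()
    }


def scale_totals(values, scale_max=10):
    """Overall scores when each alternative is rated on a fixed 0..scale_max scale."""
    return {
        name: sum(w * v[c] / scale_max for c, w in enumerate(WEIGHTS))
        for name, v in values.items()
    }


before = {"A": [1, 5], "B": [2, 3]}
after = dict(before, D=[8, 1])  # add a new alternative, D

p_before, p_after = pairwise_totals(before), pairwise_totals(after)
print(p_before["A"] < p_before["B"])  # True: B ranks above A
print(p_after["A"] > p_after["B"])    # True: adding D put A above B
print(scale_totals(before)["A"] == scale_totals(after)["A"])  # True: unchanged
```

All the implied ratios stay within the 1 to 9 pairwise scale (for example, D vs A is 8 on the first criterion), so this is not an artifact of extreme judgments.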

NOTE: Criteria are different: when you add a new criterion, it is natural to expect the final ranking to change.

Q4. Do your evaluators know enough?

Sometimes evaluators have deep knowledge of a single alternative or a subset of alternatives (projects, technologies, vendors, candidates) but not of all the alternatives that will be evaluated. It is hard to make pairwise comparisons in this case, as you would need knowledge about all the alternatives.

In comparison, scales give you a clear yard-stick to use and different people can provide input for different alternatives (though it’s usually a good idea to have one person reviewing all of these inputs to ensure the scale is applied consistently).

NOTE: For criteria, you have to know about the different criteria and their impact on the business in order to prioritize. In fact, individuals usually don’t have perfect knowledge of all criteria, but a team will typically work this out at the consensus-building stage.

3 Steps to Reduce the Number of Comparisons

"Analytic Hierarchy Process is so time consuming! I don’t have time to make all these comparisons. I can’t expect my colleagues to spend so much time making judgments. Is there a way to make it quicker, please?"

No worries. Help is on the way.

Usually, when we hear this plea for help, it’s because people have misunderstood how to get the most from AHP. This section will give you 3 easy steps to make your problem disappear. Oh, and they will probably also improve the quality of your decision.

So read on…

Step 1. Replace pairwise comparisons with scales

This is the first step, and it is where you can usually eliminate most of the comparisons. Follow the best practices on where (not) to use pairwise comparisons. The main rule is not to use pairwise comparisons to score alternatives, especially if there are more than 9 of them.

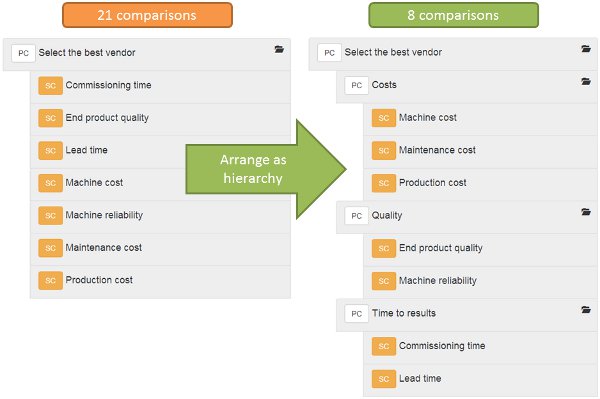

Step 2. Organize your criteria into a hierarchy

Beginners tend to use flat lists of criteria instead of hierarchies. If you have lots of criteria, you will have a lot of pairwise comparisons to make. Think how to group your criteria together. For example, you might have “Machine Cost”, “Maintenance Cost” and “Production Cost” as criteria, but these should really be sub-criteria under an overall “Costs” criterion.

This not only reduces the number of comparisons you need to make, but also improves the quality of the decision by focusing discussions on “big tradeoffs” (like Costs vs. Quality) before you work out “how to calculate the score for Costs”.
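
In a hierarchy, a sub-criterion's overall (global) weight is its local weight within its group multiplied by the weight of the criterion above it, which is how priorities at different levels are combined in AHP. Here is a small Python sketch using the Costs example above, with made-up local weights and an assumed nested-dict layout of {criterion: (local weight, sub-criteria or None)}.

```python
def global_weights(tree, parent_weight=1.0, prefix=""):
    """Flatten a criteria tree into {path: global weight}.

    tree: {criterion: (local_weight, subtree or None)}; local weights in each
    group are assumed to sum to 1.
    """
    result = {}
    for name, (local, children) in tree.items():
        weight = parent_weight * local
        path = prefix + name
        if children:
            result.update(global_weights(children, weight, path + " > "))
        else:
            result[path] = weight
    return result


criteria = {  # hypothetical local weights
    "Costs": (0.6, {
        "Machine Cost": (0.5, None),
        "Maintenance Cost": (0.3, None),
        "Production Cost": (0.2, None),
    }),
    "Quality": (0.4, None),
}
for path, weight in global_weights(criteria).items():
    print(f"{path}: {weight:.2f}")
```

Only the leaf criteria get a global weight, and together they still sum to 1, so they can feed straight into a weighted score.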

The following example shows the dramatic reduction in comparisons you can achieve simply by organizing your criteria into a hierarchy.

Step 3. Use AHP software that can complete your comparisons

Okay, so you’ve implemented scales, you’ve organized your criteria into a hierarchy and you’ve still got 10 sub-criteria on the same level, meaning you need to make 45 comparisons. This doesn’t happen often, by the way, but it could… so what do you do?

Well, TransparentChoice has the power to read your mind.

Not really, but it can extrapolate all those judgments from a much smaller number of comparisons. So, if you have 10 sub-criteria, you could input just 9 judgments and let TransparentChoice extrapolate the rest. The only problem is that the quality of the data will be low. The more comparisons you make, the more TransparentChoice can check for consistency between your answers and improve the quality of the result.

As with so many things in life, it’s a tradeoff. If the number of things you are comparing is small, say up to 6 or 7, then it’s probably worth going through all of the judgments. If, however, you are comparing a larger number of items, you may want to trade some quality for speed.

GEEK CORNER. If you’re interested in how the trade-off works, let’s look at how the software extrapolates judgments based on your inputs.

TransparentChoice analyzes the comparisons you provide and completes the missing comparisons with values that are most consistent with your input. For example, you enter comparisons A = 2xB; B = 4xC; so the algorithm can easily predict that A = 8xC.

Now this is fine if you really believe that A = 8xC, but let’s pretend for a moment that your first judgment was a mistake – you really meant that B = 2xA (your preference is the opposite of what you accidentally entered). If you stop entering judgments before the software asks you to compare A and C, you will never know you made a mistake and your results will be based on this mistake.

If, however, you do go on and compare A and C, you’d probably enter something like A = 2xC and TransparentChoice will flag up a whopping inconsistency for you. This gives you a chance to check your answers and increase the quality of your input.
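
How TransparentChoice fills in missing comparisons internally isn't described here. Below is a minimal Python sketch of the idea, under the assumption that the judgments you entered link every item into a single chain or tree (the n - 1 minimum): walk the tree, multiply ratios along the way, and read every missing comparison off the resulting relative values. The function name and input format are hypothetical.

```python
from collections import deque


def complete_comparisons(items, judgments):
    """Fill in every pairwise ratio from a minimal set of judgments.

    judgments[(x, y)] = r means "x is r times as important as y".
    Assumes the judgments link all items; if extra judgments form a cycle,
    this sketch simply ignores the redundant ones instead of checking them.
    """
    # Undirected graph: each edge stores the ratio in both directions.
    graph = {item: [] for item in items}
    for (x, y), r in judgments.items():
        graph[x].append((y, r))        # x / y = r
        graph[y].append((x, 1.0 / r))  # y / x = 1 / r
    # Breadth-first search assigns each item a value relative to the first one.
    value = {items[0]: 1.0}
    queue = deque([items[0]])
    while queue:
        x = queue.popleft()
        for y, r in graph[x]:
            if y not in value:
                value[y] = value[x] / r  # x / y = r, so y = x / r
                queue.append(y)
    if len(value) != len(items):
        raise ValueError("the judgments do not link all items")
    return {(x, y): value[x] / value[y] for x in items for y in items if x != y}


entered = {("A", "B"): 2, ("B", "C"): 4}  # A = 2xB, B = 4xC
full = complete_comparisons(["A", "B", "C"], entered)
print(full[("A", "C")])  # 8.0, inferred rather than entered

# A later direct judgment of A = 2xC disagrees with the inferred 8 by a factor of 4.
direct = 2
print(max(full[("A", "C")] / direct, direct / full[("A", "C")]))  # 4.0
```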

Example

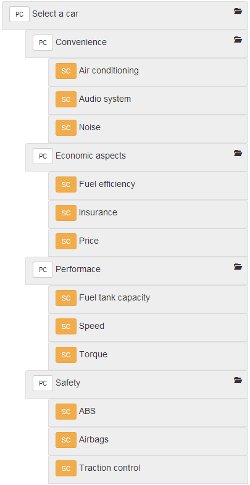

We will use a “car selection” example to show you the process of reducing the number of comparisons you need to make.

Imagine that you are trying to choose a car. There are 6 alternatives and 12 criteria. Let’s assume you start, as beginners often do, with a flat list of criteria (all criteria on the same level of hierarchy) and use pairwise comparisons everywhere in your project (to prioritize criteria and to score alternatives).

Let’s count how many comparisons you would need to make to complete the process. The number of comparisons in a given context (e.g. comparing criteria at the same level of a branch in a hierarchy, or comparing alternatives against a criterion) can be calculated as n*(n-1)/2, where n is the number of items to compare. You need to:

- Prioritize 12 criteria in context of the overall goal = 66 comparisons

- Score 6 alternatives in context of each of the 12 criteria = 180 comparisons

So, in this project, the total number of comparisons would be 66 + 180 = 246

Wow, that’s a lot of time spent inputting judgments.

And if this is a collaborative decision, where multiple people need to provide input, the time spent banging numbers into TransparentChoice gets silly.

But 6 alternatives and 12 criteria is still quite a small project… so let’s apply our three steps.

Step 1. Replace pairwise comparisons with custom scales at the bottom of the hierarchy

Effect: 180 comparisons can be replaced by 72 scores.

Step 2. Organize the criteria into a hierarchy

Let’s say that our 12 criteria are: price, fuel efficiency, insurance, airbags, traction control, ABS, noise, speed, fuel tank capacity, torque, audio system, air conditioning.

We will organize them into a hierarchy by introducing 4 higher-level criteria: convenience, economic aspects, performance, safety.

Let’s count comparisons again:

- Prioritize 4 higher-level criteria in the context of the goal = 6 comparisons

- Prioritize 4 sets of 3 sub-criteria = 12 comparisons

Effect: OK, so now we can prioritize criteria with 18 comparisons instead of 66.

Step 3. Use software to complete comparisons

If we are desperate, we could even let TransparentChoice extrapolate some of the results. The minimum number of comparisons you could make is:

- Prioritize 4 higher-level criteria = 3 comparisons

- Prioritize 4 sets of 3 sub-criteria = 8 comparisons

Effect: we reduced the number of comparisons needed to prioritize criteria from 18 to 11. Given the small saving here, we’d recommend not bothering with step 3: live with 7 extra judgments and be sure your data are consistent.

In summary, we replaced 246 comparisons with as few as 11 comparisons and 72 scores on a scale.
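
The counts in this example follow from the n*(n-1)/2 formula, plus the fact that a connected set of judgments needs at least n - 1 comparisons. A quick Python check of the arithmetic:

```python
def full_comparisons(n):
    """Every pair of n items: n * (n - 1) / 2."""
    return n * (n - 1) // 2


def minimum_comparisons(n):
    """Fewest judgments that still link all n items (a chain or tree)."""
    return n - 1


flat = full_comparisons(12) + 12 * full_comparisons(6)         # 66 + 180
hierarchy = full_comparisons(4) + 4 * full_comparisons(3)      # 6 + 12
minimum = minimum_comparisons(4) + 4 * minimum_comparisons(3)  # 3 + 8
scale_scores = 6 * 12                                          # 6 cars x 12 criteria
print(flat, hierarchy, minimum, scale_scores)  # 246 18 11 72
```

The same formula gives the 45 comparisons for 10 sub-criteria on one level, and minimum_comparisons(10) gives the 9 judgments mentioned in Step 3.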

Beginners often lose enthusiasm because they are faced with so many pairwise comparisons. Usually, this is because they’ve made a couple of basic mistakes: not using scales to score alternatives, and using a flat list of criteria instead of a hierarchy.

Usually, fixing these two errors will solve the problem, but if not, you can simply enter fewer judgments and let TransparentChoice do the rest. Just remember that there’s a tradeoff in decision quality.

How to Deal with Inconsistent Comparisons

“I am depressed. I entered my pairwise comparisons but the AHP software claims that they are inconsistent. I have no idea what this means. I was trying to change some comparisons but I think I messed it up even more. Please help me. I know Analytic Hierarchy Process is a powerful tool, but right now, I’m in a real mess!”

If you have ever felt this way, this section is for you. You will find here tips on how to deal with inconsistent comparisons. Don’t worry, it’s not difficult.

Ok, first things first…

What is this “inconsistency”?

Imagine that the software asks you to enter 3 comparisons. You answer that:

A = 3*B

B = 2*C

You will be totally consistent only if you answer that A = 6*C. Any other value will be inconsistent (a little, if you say that A = 5*C but more if you say that A = C).

In our simple example, you only had three things you were comparing. In most cases, you will have more than three comparisons and there is bound to be some degree of inconsistency.

What causes inconsistency?

Various factors can contribute to inconsistency in pairwise comparisons. Mistakes, lack of knowledge, human nature, the discrete scale used in AHP, and the capped scale for assigning values can all introduce inconsistency. Understanding these causes is crucial for improving the reliability of your decision-making process.

- Mistakes. A simple lack of concentration, or pressing a wrong button can introduce inconsistency. We are human and it is easy to make mistakes when you enter several comparisons.

- Lack of knowledge. We may not have enough information to make consistent comparisons, or we may be uncertain. In this case we use our judgment, and our judgment is sometimes not as accurate as we’d like.

- Human nature. We are human beings, we are inconsistent. This isn’t a problem, so long as we’re not too inconsistent.

- Discrete scale. In AHP, we do pairwise comparisons using a special scale which contains the values 1 to 9. This can introduce inconsistency. Imagine that you answer that A=2*B and A=3*C. The totally consistent value for comparison B vs C is B = 1.5*C, but since 1.5 is not on the scale, you must be inconsistent. This will only have a marginal effect on the total consistency, especially if the number of comparisons is not very large. Sometimes you simply don’t have a chance to be totally consistent…

- Capped scale. Imagine that you answer that A=4*B and B=5*C. To be consistent you should answer that A=20*C, but the maximum value you can use is 9. Again, the AHP methodology forces you to be inconsistent.

To effectively address the issue of inconsistency in your comparisons, it's essential to consider the impact of noise in decision-making. Discover how noise can undermine the consistency of your decisions and gain insights into strategies for mitigating its effects by reading our article on noise in decision-making.

How to resolve problems with consistency?

- Avoid comparing more than 9 elements. It is easier to be consistent on a smaller set of comparisons, and the large body of AHP research suggests that you should try not to compare more than 9 items using pairwise comparisons.

- Proper hierarchy. Don’t compare elements that are extremely different in priority/weight. Remember that 9 is maximum on the scale and it should be enough. If one item is more than 9x as important as another, they probably don’t belong on the same level of your hierarchy.

- Don’t overuse large values. If you overuse large scores, you will run into problems with the capped scale. Remember, if you select a score of 9, you are saying that A is 9x as important as B.

- Eliminate contradictions. There are some obvious contradictions that you might make. For example, if you say that A > B > C > A… well, there’s a contradiction that needs to be sorted out. TransparentChoice helps by alerting you (red circle below the contradictory judgment). Simply go back and look at your judgment to resolve the inconsistency.

- Reduce the inconsistency of your comparisons. TransparentChoice, and the AHP methodology it is based on, has a mechanism to measure consistency. It is called the consistency ratio (CR), and as long as it is below 10%, you don’t usually have to worry.

Of course, that doesn’t mean that 11% is always unacceptable. If CR is above 10%, the software warns you and indicates the comparisons that seem most inconsistent. If you review those judgments, you will often find that the score is not really what it should be.

If your consistency ratio is still higher than 10%, the next two most inconsistent judgments will be flagged for you to review. Simply repeat this process until you’re happy with your judgments, or until there are no more orange flags.
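
For readers curious about the math behind the 10% rule of thumb, the sketch below computes Saaty's consistency ratio: CR = CI / RI, where CI = (λmax - n) / (n - 1), λmax is the principal eigenvalue of the comparison matrix (found here by power iteration) and RI is Saaty's random index for a matrix of size n. It is a minimal illustration of the standard method, not TransparentChoice's actual implementation, and the function names are hypothetical.

```python
# Saaty's random consistency index (RI) for matrix sizes 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def principal_eigen(matrix, iterations=1000, tol=1e-12):
    """Power iteration on a positive reciprocal matrix.

    Returns (lambda_max, priority vector normalized to sum to 1).
    """
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        new_w = [x / total for x in aw]
        converged = max(abs(a - b) for a, b in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    return lambda_max, w


def consistency_ratio(matrix):
    """CR = CI / RI, with CI = (lambda_max - n) / (n - 1)."""
    n = len(matrix)
    if n < 3:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    lambda_max, _ = principal_eigen(matrix)
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# A = 2*B, A = 4*C, B = 2*C: perfectly consistent.
consistent = [[1, 2, 4], [1/2, 1, 2], [1/4, 1/2, 1]]
# A > B and B > C, but C > A: a contradictory cycle.
cyclic = [[1, 3, 1/3], [1/3, 1, 3], [3, 1/3, 1]]

for name, m in [("consistent", consistent), ("cyclic", cyclic)]:
    cr = consistency_ratio(m)
    print(name, round(cr, 2), "OK" if cr < 0.10 else "review")
```

The consistent matrix comes out at essentially zero, while the cyclic A > B > C > A matrix lands far above the 10% threshold (roughly 1.15, i.e. 115%).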

Key features of AHP Software

Analytic Hierarchy Process is intuitive and easy to use. However, if you try to use it without dedicated software, the underlying math can be challenging, especially as the number of participants, alternatives and criteria increases.

Over the last few years we have developed a number of AHP-based decision-making software packages, including:

- AHP project, which was used by thousands of users from around the globe. It was available for free, and I think it might have been one of the most widely used decision-making packages ever. In fact, its popularity combined with “free” pricing killed it: we simply didn’t have enough resources to support it.

- MakeItRational, which was very popular, especially the “Desktop version.” MakeItRational Desktop delivered a unique way of supporting offline collaboration behind a firewall, allowing you to collect votes from evaluators without sending data to external servers. Our defense-sector customers, among others, loved this level of data security! (MakeItRational was discontinued because it was built on Microsoft Silverlight, an application framework that has itself been discontinued.)

- TransparentChoice – the latest and the most advanced AHP software.

Each project brought us tons of feedback on users’ needs. In this article I have collected the features that I believe are “must-haves” for any effective AHP-based decision-making software. AHP software should be much more than just an AHP math calculator.

1. Managing criteria hierarchy

Building the criteria hierarchy can be a challenge. Before your hierarchy is ready to use, you will probably need to reorganize it a couple of times as the team works through the "creative" process.

Things like “drag and drop” are essential here. Of course, you can draw your hierarchy on a piece of paper and then model it with software but what if you need to change it later?

Sometimes participants report new criteria during evaluation. Some software will not handle this very well, losing data or forcing you to restart the data collection process.

We sometimes hear of users leaving important criteria out simply because they can't face "restarting" their project. Your software should, in fact, support your changes easily and should carry your already-collected judgments across seamlessly.
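
One way software can carry judgments across when a criterion is added is sketched below, assuming the judgments for one level of the hierarchy are stored as a square comparison matrix. This layout and the helpers `add_element` and `missing_pairs` are hypothetical, not how any particular product stores its data. The point it shows: the existing judgments survive untouched, and only the comparisons involving the new criterion (n of them for n existing criteria) still need to be collected.

```python
from typing import List, Optional

# Hypothetical storage: a comparison matrix where None marks a judgment not yet collected.
Matrix = List[List[Optional[float]]]


def add_element(matrix: Matrix) -> Matrix:
    """Return a copy of `matrix` with one new criterion appended.

    Existing judgments are copied unchanged; comparisons against the new
    criterion are None until an evaluator provides them.
    """
    n = len(matrix)
    grown = [row[:] + [None] for row in matrix]
    grown.append([None] * n + [1.0])  # a criterion compared with itself is 1
    return grown


def missing_pairs(matrix: Matrix) -> List[tuple]:
    """Upper-triangle pairs (i, j) that still need a judgment."""
    n = len(matrix)
    return [(i, j) for i in range(n) for j in range(i + 1, n)
            if matrix[i][j] is None]


existing = [[1.0, 3.0, 5.0], [1/3, 1.0, 2.0], [1/5, 1/2, 1.0]]
updated = add_element(existing)
print(missing_pairs(updated))  # [(0, 3), (1, 3), (2, 3)]
```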

2. Reducing the number of pairwise comparisons

Pairwise comparisons are one of the most important features of AHP. This is where you break down a complex decision into small judgments like "Is Quality more important than Security, and by how much?"

However, when the number of comparisons is large, providing them all is time-consuming. AHP software should help you reduce that number.

The total number of comparisons is n(n-1)/2, where n is the number of compared elements. To get meaningful answers from AHP, however, this number can be reduced to as few as n-1.

So, for example, if you compare 10 elements:

Total number of comparisons: 10x(10-1)/2 = 45

Minimal number: (10-1) = 9.

You get 9 comparisons instead of 45. It makes a difference: if each judgment takes 1 minute, you save 36 minutes, over half an hour, for each participant in your project. Nice job! But it's not quite that simple; see the note on consistency below.
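
The arithmetic above as a tiny Python helper. The one-minute-per-judgment figure is the assumption from the example, and the function names are illustrative.

```python
def total_comparisons(n: int) -> int:
    """Every pair of n elements compared once: n(n-1)/2."""
    return n * (n - 1) // 2


def minimal_comparisons(n: int) -> int:
    """Fewest comparisons that still link all n elements: n - 1."""
    return n - 1


n = 10
minutes_per_judgment = 1  # assumption taken from the worked example in the text
saved = (total_comparisons(n) - minimal_comparisons(n)) * minutes_per_judgment
print(total_comparisons(n), minimal_comparisons(n), saved)  # 45 9 36
```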

AHP software should help you provide the minimum data needed to perform the calculations in the shortest time.

Remember, however, that redundant comparisons are what allow you to check consistency. The more comparisons you provide, the more reliable the consistency check you can perform.

If you limit the number of comparisons to the absolute minimum, you won't be able to check consistency.
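
Why does the minimum rule out a consistency check? The sketch below assumes the n-1 comparisons form a simple chain (A vs B, B vs C, C vs D, and so on); any set that links every element behaves the same way. The weights are then pinned down by multiplying along the chain, and they reproduce every judgment exactly, however odd the judgments are, so there is no leftover mismatch that could signal inconsistency. The helper names are hypothetical.

```python
def weights_from_chain(chain):
    """Weights implied by n-1 chained judgments: A vs B, B vs C, C vs D, ...

    chain[k] = how many times more important element k is than element k+1.
    """
    raw = [1.0]  # arbitrary base weight for the last element
    for ratio in reversed(chain):
        raw.insert(0, raw[0] * ratio)
    total = sum(raw)
    return [x / total for x in raw]


def max_residual(chain):
    """Largest gap between a judgment and the ratio the derived weights imply."""
    w = weights_from_chain(chain)
    return max(abs(w[k] / w[k + 1] - chain[k]) for k in range(len(chain)))


print([round(x, 3) for x in weights_from_chain([2, 2])])  # [0.571, 0.286, 0.143]
# Even an erratic set of judgments is matched exactly: nothing is left to flag.
print(max_residual([9, 1/9, 9]) < 1e-9)  # True
```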

3. Consistency checking

When you, or your evaluators, provide tens of pairwise comparisons, you won’t be totally consistent. AHP software should check the consistency of entered data and warn if the inconsistency is too high.

Often your evaluators will not be familiar with AHP. They (and everyone else) will need something more than just a “you are inconsistent – fix it” message. The following can help:

- Inconsistency metric. It is good to know whether your adjustments improve consistency or make it worse. As you change the values of comparisons, watch the inconsistency metric: if it decreases, you know you are on the right track.

- Identification of the most inconsistent comparisons. Imagine that you have provided 45 comparisons and got an inconsistency warning. You want to fix it, but where do you start? Software should be able to suggest a starting point: the most inconsistent comparisons.

- Identification of contradictions. There might be some comparisons that are not just inconsistent, but are downright contradictory. In most cases, these are errors that have to be fixed but are very hard to find without support from software.

- Information on how to resolve inconsistency. It is possible to guide an evaluator on how to modify comparisons in order to improve consistency by "suggesting an answer". However, this is a problem if people overuse suggestions instead of rethinking their judgments. In other words, you don't want people relying on the suggestions of the software, you want them thinking it through themselves.
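
The sketch below illustrates two of these aids on a hypothetical four-element matrix. To find the most inconsistent comparisons, it derives weights with the row geometric mean method (a common approximation, swapped in here for the eigenvector method) and ranks each judgment a_ij by how far a_ij × w_j / w_i is from 1 on a log scale. To find contradictions, it looks for ordinal cycles such as A > B > D > A. This is an illustrative approach with hypothetical function names, not the specific algorithm behind any product's flags.

```python
import math


def geometric_mean_weights(m):
    """Row geometric means, normalized: a common approximation of AHP priorities."""
    n = len(m)
    gm = [math.prod(row) ** (1.0 / n) for row in m]
    total = sum(gm)
    return [g / total for g in gm]


def most_inconsistent(m, top=2):
    """Rank upper-triangle judgments by how far a_ij departs from w_i / w_j."""
    w = geometric_mean_weights(m)
    n = len(m)
    scored = []
    for i in range(n):
        for j in range(i + 1, n):
            # a_ij * w_j / w_i equals 1 when the judgment matches the weights exactly
            error = abs(math.log(m[i][j] * w[j] / w[i]))
            scored.append((error, i, j))
    scored.sort(reverse=True)
    return [(i, j) for _, i, j in scored[:top]]


def find_contradictions(m):
    """Ordinal cycles i > j > k > i, each reported once (smallest index first)."""
    n = len(m)
    cycles = []
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(i + 1, n):
                if j != k and m[i][j] > 1 and m[j][k] > 1 and m[k][i] > 1:
                    cycles.append((i, j, k))
    return cycles


names = ["A", "B", "C", "D"]
# Hypothetical judgments: consistent with A=2B=4C=8D, except the evaluator said D = 3*A.
m = [[1, 2, 4, 1/3],
     [1/2, 1, 2, 4],
     [1/4, 1/2, 1, 2],
     [3, 1/4, 1/2, 1]]

i, j = most_inconsistent(m, top=1)[0]
print("start with", names[i], "vs", names[j])  # start with A vs D
print([tuple(names[x] for x in c) for c in find_contradictions(m)])
# [('A', 'B', 'D'), ('A', 'C', 'D')]
```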

4. Collaborative voting

AHP is often used for collaborative decision making. You get the biggest value from AHP software during evaluation, that is, when weighting criteria and "scoring" alternatives against those criteria.

Why? Well, the math behind collecting and reviewing votes is complex, and there is no good way to do it without dedicated software.

People should be able to make their own judgments, and the software should then allow the team to quickly identify areas of disagreement. This process becomes unmanageable very quickly without the aid of software.
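
A common way to combine individual pairwise judgments in AHP is the geometric mean, because it preserves reciprocity (the arithmetic mean does not: the mean of 2 and 8 is 5, but the mean of 1/2 and 1/8 is not 1/5). The sketch below uses it for the group value and uses the spread of the votes on a log scale as a simple disagreement measure, so the most contested pairs can be discussed first. The votes are hypothetical, and the disagreement measure is our choice for illustration.

```python
import math
import statistics


def aggregate_judgment(votes):
    """Geometric mean of the evaluators' ratios.

    Unlike the arithmetic mean, it keeps reciprocity: if everyone's B-vs-A
    answer is 1/x of their A-vs-B answer, the aggregates are reciprocal too.
    """
    return math.exp(statistics.fmean([math.log(v) for v in votes]))


def disagreement(votes):
    """Spread of the votes on a log scale (0.0 when everyone agrees); illustrative choice."""
    return statistics.pstdev([math.log(v) for v in votes])


# Hypothetical votes from three evaluators on two criterion pairs.
votes = {
    ("Quality", "Security"): [3, 3, 4],
    ("Quality", "Cost"): [5, 1/5, 3],
}

# Discuss the most contested pairs first.
for pair, v in sorted(votes.items(), key=lambda kv: disagreement(kv[1]), reverse=True):
    print(pair, round(aggregate_judgment(v), 2), round(disagreement(v), 2))
```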

5. Sensitivity analysis

Sensitivity analysis is another important feature. Without it, you simply don’t know how stable your results are. Maybe a small change in priorities would lead to a totally different result?
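
A minimal one-way sensitivity analysis is sketched below. It sweeps the weight of one criterion from 0 to 1, rescales the other weights proportionally so they still sum to 1 (an assumption; other rescaling schemes exist), and reports where the top-ranked alternative changes. The criteria, weights and scores are hypothetical.

```python
def overall_scores(weights, scores):
    """Weighted sum of each alternative's local priorities."""
    return {alt: sum(weights[c] * local[c] for c in weights)
            for alt, local in scores.items()}


def vary_weight(weights, criterion, value):
    """Set one criterion's weight; rescale the others proportionally (an assumption)."""
    rest = 1.0 - weights[criterion]  # assumes the criterion's current weight is < 1
    return {c: (value if c == criterion else w * (1.0 - value) / rest)
            for c, w in weights.items()}


def switch_points(weights, scores, criterion, steps=100):
    """Sweep the criterion's weight from 0 to 1; report where the leader changes."""
    points, previous = [], None
    for k in range(steps + 1):
        value = k / steps
        totals = overall_scores(vary_weight(weights, criterion, value), scores)
        leader = max(totals, key=totals.get)
        if previous is not None and leader != previous:
            points.append((value, leader))
        previous = leader
    return points


# Hypothetical weights and local priorities for two alternatives.
weights = {"Cost": 0.5, "Performance": 0.3, "Support": 0.2}
scores = {
    "X": {"Cost": 0.7, "Performance": 0.2, "Support": 0.4},
    "Y": {"Cost": 0.3, "Performance": 0.8, "Support": 0.6},
}

base = overall_scores(weights, scores)
print(max(base, key=base.get))                   # Y
print(switch_points(weights, scores, "Cost"))    # [(0.53, 'X')]
```

With Cost currently weighted at 0.5, raising it to about 0.53 already hands the win from Y to X: exactly the kind of fragile result sensitivity analysis is meant to expose.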

Why AHP-based Spreadsheets Fail

Some people try to use spreadsheets that do all the maths of AHP.

But that’s totally missing the point.

AHP is about people. It’s about collaboration. It’s about knowledge-sharing and consensus-building, and a spreadsheet simply won’t do that for you. Implementing AHP in a spreadsheet will often leave stakeholders confused, with a sense that this is “decision-making by numbers” that has nothing to do with the real world.

AHP-based project prioritization software is a pretty rare beast and we like to think that TransparentChoice’s software is the best. We certainly put a lot of effort into making it easy to use and to keeping the cost of ownership down.

It’s this ease of use, the visual nature of collaborative voting and the discussions that follow that transform AHP from an exercise in math into a powerful tool for making great decisions, ones that enjoy strong buy-in.

If you’re considering using AHP to help prioritize your projects, here are 5 questions to ask before deciding on a project prioritization tool.

Video Guide: Case Study on Selecting a Data Warehouse Solution

In this section, we walk through a practical example that demonstrates how to use AHP to select the most suitable data warehouse solution from several alternatives.

Watch the embedded video for a walk-through of the decision-making process.

- Define Gating Factors for Data Warehouse Solutions: Identify the gating factors specific to data warehouse solutions such as data security compliance, scalability, and initial vendor responsiveness. Only the alternatives that meet these essential requirements will proceed to the next phase of assessment.

- Identify Assessment Criteria for Data Warehouse Solutions: List out the criteria important for evaluating data warehouse solutions like integration capabilities, performance, cost, and customer support. Also, consider relevant sub-criteria like real-time data processing under performance.

- Assign Weights to Criteria and Sub-Criteria: Engage data management experts and stakeholders to conduct pairwise comparisons among criteria and sub-criteria. This process will determine the weights for each, reflecting their relative importance in selecting a data warehouse solution.

- Evaluate Data Warehouse Solutions Against Criteria: Ask the panel of experts to score each data warehouse solution on how well it fulfills the criteria and sub-criteria. For instance, solutions might be rated on a scale from 1 to 5 on their ability to integrate with existing data infrastructure.

- Compute Scores for Data Warehouse Solutions: Utilize the AHP methodology to aggregate the weighted scores of each alternative. This will result in a value score representing the overall suitability of each data warehouse solution according to the chosen criteria.

- Conduct Cost and Risk Analysis: For the top-scoring data warehouse solutions, conduct a detailed cost and risk analysis. Examine the total cost of ownership, including licensing fees, and evaluate risks such as vendor lock-in or compatibility issues with existing systems.

- Final Selection of the Data Warehouse Solution: Using the aggregated scores and the insights from the cost and risk analysis, make a final decision on the data warehouse solution. The selected solution should offer the best combination of features, performance, cost-effectiveness, and low risk.
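
The value-scoring part of these steps (gates, weighted criteria and sub-criteria, 1 to 5 ratings) can be sketched in a few lines of Python. Everything here is hypothetical: the candidate names, the "Query speed" sub-criterion, the weights and the ratings are invented for illustration. In practice the weights would come from the experts' pairwise comparisons, and dividing each rating by the top of the scale is our assumption about how ratings are normalized. The cost and risk analysis steps are not modeled.

```python
# Hypothetical hierarchy: criterion -> (local weight, {sub-criterion: local weight}).
# In a real project these weights would come from pairwise comparisons.
HIERARCHY = {
    "Integration": (0.30, {}),
    "Performance": (0.35, {"Real-time processing": 0.6, "Query speed": 0.4}),
    "Cost": (0.20, {}),
    "Customer support": (0.15, {}),
}

GATES = ["Security compliance", "Scalability", "Vendor responsiveness"]


def global_weights(hierarchy):
    """Global weight of a leaf = parent's weight x the leaf's local weight."""
    leaves = {}
    for criterion, (weight, subs) in hierarchy.items():
        if not subs:
            leaves[criterion] = weight
        for sub, local in subs.items():
            leaves[f"{criterion} / {sub}"] = weight * local
    return leaves


def value_scores(candidates, hierarchy, max_rating=5):
    """Drop candidates that fail any gate, then sum weighted ratings.

    Dividing by max_rating to normalize ratings is an assumption.
    """
    weights = global_weights(hierarchy)
    return {
        name: sum(weights[leaf] * c["ratings"][leaf] / max_rating for leaf in weights)
        for name, c in candidates.items()
        if all(c["gates"][g] for g in GATES)
    }


# Hypothetical ratings on a 1..5 scale (for Cost, a higher rating means better value).
candidates = {
    "Warehouse A": {
        "gates": {g: True for g in GATES},
        "ratings": {"Integration": 4, "Performance / Real-time processing": 3,
                    "Performance / Query speed": 5, "Cost": 2, "Customer support": 4},
    },
    "Warehouse B": {
        "gates": {g: True for g in GATES},
        "ratings": {"Integration": 3, "Performance / Real-time processing": 5,
                    "Performance / Query speed": 4, "Cost": 4, "Customer support": 3},
    },
    "Warehouse C": {
        "gates": {"Security compliance": True, "Scalability": False,
                  "Vendor responsiveness": True},
        "ratings": {},  # never scored: it failed a gate
    },
}

scores = value_scores(candidates, HIERARCHY)
ranking = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
print([(name, round(s, 3)) for name, s in ranking])
# [('Warehouse B', 0.752), ('Warehouse A', 0.706)]
```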

This case study illustrates how AHP can be specifically adapted to make a "pick a winner" decision when selecting a data warehouse solution. The methodology's structured approach ensures that the decision is comprehensive, factoring in various criteria and expert judgment.

Summary

Good concepts stand the test of time. The ancient Greeks invented democracy, and it's still the most successful system of government. But nobody today would implement it the way they did 2,500 years ago. So it is with AHP.

Analytic Hierarchy Process is not the latest methodology, but it is a well-proven process for collaborative decision making. It is accessible to everyone and affordable, so it can be used for decisions large and small.

Even though AHP is simple in concept, the math is time-consuming, so good software will help you roll it out with confidence. AHP software can also help the consensus-building process and generate the graphs and data that help get that crucial executive sign-off.